Systems - Scaling

Clusters, parallelisation and distribution

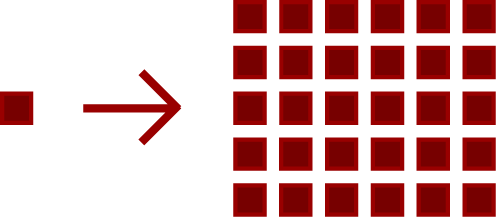

Parallelisation

Before introducing clusters and distribution, it is important to ensure that your algorithms can be parallelised. Modern processors can perform multiple tasks at the same time, so the first step is to make your programs use more than a single thread, which enables parallel execution. This is a prerequisite for distribution. We can help you evaluate your algorithms and methods to determine whether they can already run in parallel, whether they need to be adapted, or whether they are unfortunately restricted to running serially.
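As a minimal sketch of the idea above, the Python example below runs the same batch of independent tasks serially and then in parallel, and checks that both give identical results. `simulate` is a hypothetical CPU-bound task chosen only for illustration. The code assumes each call depends on nothing but its own input, which is what makes it safe to parallelise. The standard-library `ProcessPoolExecutor` is used here; it is one of several possible approaches, not a prescribed one.

```python
import math
from concurrent.futures import ProcessPoolExecutor


def simulate(seed: int) -> float:
    """Hypothetical CPU-bound task: the result depends only on `seed`."""
    total = 0.0
    for i in range(1, 50_000):
        total += math.sin(seed * i) / i
    return total


def run_serial(seeds):
    # Baseline: tasks run one after another on a single core.
    return [simulate(s) for s in seeds]


def run_parallel(seeds, workers=None):
    # Independent tasks are handed to a pool of worker processes.
    # pool.map returns results in the same order as the inputs.
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulate, seeds))


if __name__ == "__main__":
    seeds = list(range(8))
    # Parallelising must not change the answer, only the wall-clock time.
    assert run_parallel(seeds) == run_serial(seeds)
    print("parallel and serial results match")
```

Tasks that read and write shared state, or that need the previous task's result, cannot be split this way without first being adapted.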

Clustering and Distribution

If your problem is too large to be handled by a single computer, it may be time to scale out. We can assist with the architecture and administration of High Performance Computing (HPC) clusters, High Throughput Computing (HTC) clusters, and other types of clusters.

We can help you with:

- Physical clusters

- Dedicated machine clusters

- Ad-hoc clusters: if your computing needs are modest, you can utilise your office computers during off-hours. This provides a cost-effective alternative to dedicated machines.

- Amazon Web Services (AWS)

- MIT StarCluster

- Custom solutions
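For high-throughput workloads, distribution often begins with splitting a large set of independent tasks into chunks, one for each machine. The sketch below shows one simple way to do this. It is only an illustration under stated assumptions. The function names and the integer `node_id` are hypothetical. On a real cluster, the scheduler or your own launch scripts would decide which machine runs which chunk.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def partition(tasks: Sequence[T], n_nodes: int) -> List[List[T]]:
    """Split tasks into n_nodes contiguous chunks whose sizes differ by at most one."""
    if n_nodes < 1:
        raise ValueError("n_nodes must be at least 1")
    base, extra = divmod(len(tasks), n_nodes)
    chunks, start = [], 0
    for node in range(n_nodes):
        # The first `extra` nodes each take one additional task.
        size = base + (1 if node < extra else 0)
        chunks.append(list(tasks[start:start + size]))
        start += size
    return chunks


def tasks_for_node(tasks: Sequence[T], n_nodes: int, node_id: int) -> List[T]:
    """Chunk that one machine (hypothetical node_id, e.g. an office PC overnight) would run."""
    return partition(tasks, n_nodes)[node_id]


if __name__ == "__main__":
    print(partition(list(range(10)), 3))  # [[0, 1, 2, 3], [4, 5, 6], [7, 8, 9]]
```

Contiguous, evenly sized chunks work well when tasks take roughly the same time. If their run times vary widely, a shared work queue that idle machines pull from usually balances the load better.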